Audiosync

GitHub link

When I was in high school, we were making a movie and used an external audio recorder to get better audio quality than the camera's internal mic. During editing, it was quite tedious to sort the files by scene and shot and to synchronize the external audio with the video.

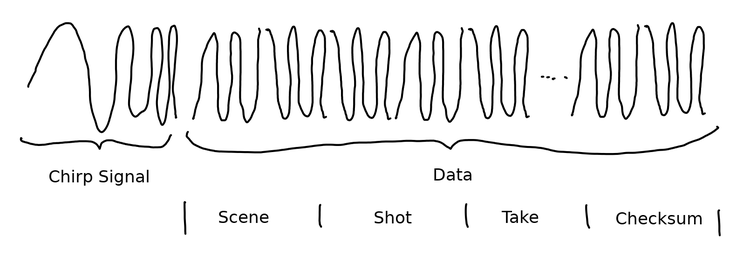

I had the idea to build an app that encodes the scene, shot and take into a sound that is recorded by both the camera's internal mic and the external recorder. Later, I could extract that information on the PC and use the position of the sound within each recording to sync the two files.

I learned about different methods to encode data into sound. I found this report, which I used to implement binary phase-shift keying (BPSK). To detect the beginning of the signal, I looked at the amplitude of the carrier frequency over time and selected the first point where it exceeded some threshold. This was very unreliable, though.
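
To illustrate the idea behind BPSK, here is a minimal sketch in Python. It is not the project's actual code: the sample rate, carrier frequency, symbol length and the function names are all assumptions chosen to keep the example small. Each bit is sent as a short burst of the carrier, and a 1 flips the phase by 180 degrees. The decoder assumes it already knows where the signal starts, which is exactly the part that turned out to be hard.

```python
import math

# All three constants are assumed values for illustration only.
SAMPLE_RATE = 44100     # samples per second
CARRIER_HZ = 4410.0     # carrier frequency: exactly 10 samples per cycle
SAMPLES_PER_BIT = 100   # one bit lasts 10 full carrier cycles


def bpsk_modulate(bits):
    """Turn a list of 0/1 bits into audio samples.

    Bit 0 is sent with phase 0, bit 1 with phase pi (a sign flip).
    """
    samples = []
    for i, bit in enumerate(bits):
        sign = -1.0 if bit else 1.0
        for n in range(SAMPLES_PER_BIT):
            t = (i * SAMPLES_PER_BIT + n) / SAMPLE_RATE
            samples.append(sign * math.sin(2 * math.pi * CARRIER_HZ * t))
    return samples


def bpsk_demodulate(samples, start=0):
    """Recover the bits, assuming the signal begins at index `start`.

    Each symbol is multiplied with a reference carrier and summed;
    the sign of the sum tells whether the phase was flipped.
    """
    bits = []
    n_bits = (len(samples) - start) // SAMPLES_PER_BIT
    for i in range(n_bits):
        acc = 0.0
        for n in range(SAMPLES_PER_BIT):
            t = (i * SAMPLES_PER_BIT + n) / SAMPLE_RATE
            reference = math.sin(2 * math.pi * CARRIER_HZ * t)
            acc += samples[start + i * SAMPLES_PER_BIT + n] * reference
        bits.append(1 if acc < 0 else 0)
    return bits
```

If `start` is off by even half a carrier cycle, the reference carrier is out of phase and the decoded bits come out inverted, so the decoder depends on locating the start of the signal precisely.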

I asked a question about how to solve this on Stack Exchange, which helped me find a working solution: I added a chirp to the beginning of the signal and looked for the position of this chirp in the recording using cross-correlation.
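
The chirp search can be sketched roughly like this. Again, this is only an illustration under my own assumptions (a linear chirp, hypothetical function names and parameters), not the code from the project. The known chirp is slid along the recording, and at each position the two are multiplied sample by sample and summed. The position with the largest sum is taken as the start of the signal.

```python
import math


def make_chirp(n_samples, f_start, f_end, sample_rate):
    """Linear chirp whose frequency sweeps from f_start to f_end (in Hz)."""
    duration = n_samples / sample_rate
    sweep_rate = (f_end - f_start) / duration  # Hz per second
    chirp = []
    for n in range(n_samples):
        t = n / sample_rate
        phase = 2 * math.pi * (f_start * t + 0.5 * sweep_rate * t * t)
        chirp.append(math.sin(phase))
    return chirp


def find_offset(recording, template):
    """Naive cross-correlation: return the lag with the highest score."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(recording) - len(template) + 1):
        score = sum(recording[lag + i] * template[i]
                    for i in range(len(template)))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag
```

A chirp suits this job because it only lines up well with itself at one position, so the correlation has a single sharp peak instead of the slow rise that tripped up the threshold approach.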

With the naive implementation, however, the cross-correlation was very slow for long audio files. Searching a bit further, I found a solution: the fast Fourier transform (FFT) can be used to speed up the cross-correlation.
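
The trick is that cross-correlation in the time domain becomes a plain element-wise multiplication in the frequency domain. For a recording of N samples and a chirp of M samples, the naive version needs on the order of N·M multiplications, while the FFT version needs on the order of N·log N. The sketch below uses a small hand-written radix-2 FFT so that it runs without any dependencies. In practice a library FFT would be the better choice, and the function names here are my own, not taken from the project.

```python
import cmath


def fft(x, inverse=False):
    """Recursive radix-2 FFT (unscaled). len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    sign = 1 if inverse else -1
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def fft_cross_correlate(recording, template):
    """Cross-correlation computed as IFFT(FFT(recording) * conj(FFT(template))).

    Returns one score per lag at which the template fits fully
    inside the recording, same as the naive sliding dot product.
    """
    # Zero-pad to a power of two that is long enough to keep the
    # circular wrap-around away from the lags we care about.
    size = 1
    while size < len(recording) + len(template):
        size *= 2
    rec_f = fft(list(recording) + [0.0] * (size - len(recording)))
    tpl_f = fft(list(template) + [0.0] * (size - len(template)))
    product = [r * t.conjugate() for r, t in zip(rec_f, tpl_f)]
    corr = fft(product, inverse=True)
    n_lags = len(recording) - len(template) + 1
    # The inverse transform above is unscaled, so divide by size here.
    return [c.real / size for c in corr[:n_lags]]
```

The index of the largest value in the returned list is the same offset the naive search would find, up to floating-point rounding.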

What was really cool about this project was that it seemed quite difficult and beyond my mathematical abilities at the beginning, but with research and experimentation I managed to find solutions to the problems I encountered. I got it to a working prototype stage but have not continued working on it since, because I have not had a movie project where I wanted to use it again.